The AI sidekick

By an engineering manager who found unlikely help in an AI tool named Cursor.

The “Just ship it” chaos

Picture this: It’s release eve, and your team Slack is exploding.

Dev1: “Anyone else seeing a null pointer in the payment service? Happens only on Fridays!”

Dev2: “🤦‍♂️ Here we go again… I’ll add more logs.”

QA: “The front-end shopping cart button randomly stopped working too.”

Product Manager: “We have to release in 2 hours. Just ship it and pray!”

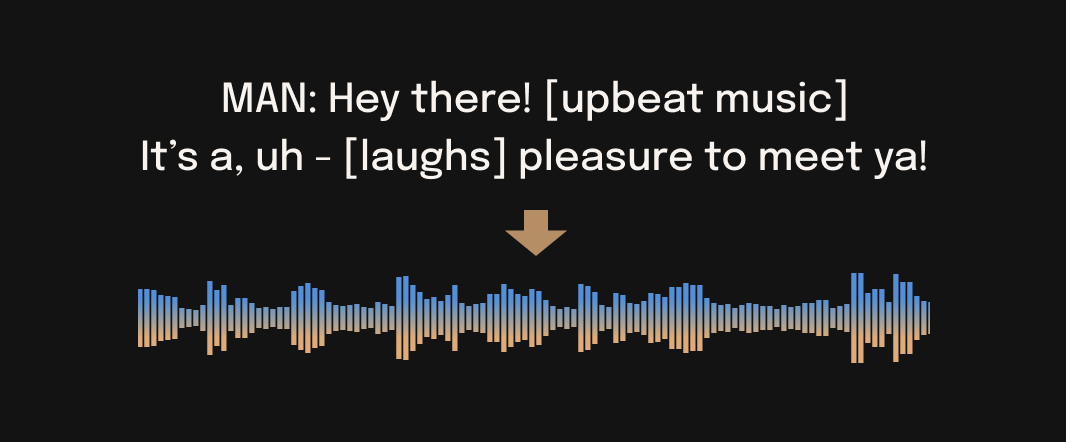

Sound familiar? Endless Slack threads, mystery bugs popping up at the worst times, and the classic last-minute “just ship it” rush. As an engineering manager, you juggle these fires while pushing the team to deliver features on time without burning out. It often feels like you need a superhero sidekick who can be everywhere at once – reviewing backend code, tinkering with frontend UI, double-checking tests, and maybe even helping with that new AI project your CTO is excited about.

What if I told you that sidekick exists, doesn’t sleep, and writes code? No, not an over-caffeinated junior dev – I’m talking about Cursor, an AI-powered development tool. In my journey from skeptic to advocate, I discovered that Cursor isn’t just another dev tool; it’s a power tool for engineering teams. It has helped us accelerate delivery, catch bugs early, reduce dev friction, and streamline workflows across backend, frontend, and even machine learning projects.

Let’s rewind and walk through how I discovered Cursor’s potential across the development lifecycle, and how it turned our “just ship it” chaos into a smoother, saner process.

Meet Cursor

When I first heard about Cursor, I thought it was just for prototypers or solo hackers vibing on new ideas. (Even some of my devs assumed Cursor and tools like Claude were only useful for quick prototypes or toy apps.) I quickly learned how wrong we were. Cursor is an AI-enabled IDE that acts like a smart pair programmer. It uses powerful large language models to assist with coding tasks. In fact, the latest Claude model can handle a 100k token context (novel-length input), meaning it can ingest and reason about your entire codebase when needed.

Unlike a normal code editor or simple autocomplete, Cursor can actually read and write files, run tests, and perform multi-step tasks autonomously. You chat with it or give it goals, and it writes code and even executes it. Under the hood, Cursor’s AI agent will iteratively draft code, run commands, check results, and refine its work until the goal is met. It’s like having a junior developer who can read all your project docs in seconds and tries 100 solutions without complaining.

Importantly for me, Cursor isn’t just about churning out code faster – it’s about improving the whole dev workflow. Let me illustrate through my story: a chronological journey of adopting Cursor across backend, frontend, and ML projects in our team.

Act I – Taming a backend beast

Setting: a critical backend API endpoint is failing intermittently. Developers are stumped and a release is on the line.

As the EM, I usually jump in to facilitate debugging sessions, maybe crack open the debugger or scour logs. This time, I decided to see what Cursor could do. I opened the project in Cursor (which had our whole repository indexed and ready), navigated to the suspect function, and simply asked our new AI assistant: “Why might this function be updating a field occasionally?”

Within seconds, Cursor’s chat panel analyzed the code and highlighted a possible issue: an unchecked SQL behavior that could update fields under a rare condition. It was spot-on. No Stack Overflow searches, no hours in the debugger. Just a straightforward explanation from the AI of what could be wrong, based on the code context.

Encouraged, I then asked Cursor to write a unit test to reproduce the bug scenario. It generated a concise test targeting that edge case (the AI even guessed the intended behavior from context and our docs). We ran the test – it failed (as expected) – and then I instructed Cursor to fix the function. Cursor jumped into action: it edited the code to handle the case, reran the test, and this time it passed, all in one go. 🎉

This was my first “holy smokes” moment. Cursor had just saved us from a late-night debugging marathon. The key was letting the AI follow a tight edit-and-test loop: write code, write test, run test, fix bugs, repeat. By having the AI run the code and tests immediately, it caught its own mistakes early – effectively acting as both coder and initial tester. As one guide on using Cursor puts it, “you typically want the AI to write the code, write the test, and then execute this test while fixing any bugs it finds”. In our case, that meant identifying a bug that had eluded our human eyes, without even cracking open a traditional debugger.
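The loop described above can be sketched in a few lines of TypeScript. This is a toy model under loud assumptions, not Cursor's internals: `proposeFix` and `runTests` are hypothetical callbacks standing in for the AI's edit step and the test runner, `maxAttempts` is an invented guardrail so the loop cannot spin forever, and the discount function is a made-up stand-in for the real bug.

```typescript
// Toy model of an edit-and-test loop. Nothing here is Cursor's real API;
// every name is hypothetical and exists only for illustration.

interface TestResult {
  passed: boolean;
  failures: string[]; // failure messages fed back into the next edit
}

interface LoopOutcome<Code> {
  code: Code;
  attempts: number; // how many edits were made before stopping
  passed: boolean;
}

function editTestLoop<Code>(
  initial: Code,
  runTests: (code: Code) => TestResult,
  proposeFix: (code: Code, failures: string[]) => Code,
  maxAttempts = 5,
): LoopOutcome<Code> {
  let code = initial;
  let result = runTests(code);
  let attempts = 0;

  // Keep editing only while tests fail and the attempt budget remains.
  while (!result.passed && attempts < maxAttempts) {
    code = proposeFix(code, result.failures);
    attempts += 1;
    result = runTests(code);
  }
  return { code, attempts, passed: result.passed };
}

// Usage with stand-ins: the "code" is a discount function, and the "fix"
// swaps in a version that handles the edge case.
type Discount = (total: number) => number;

const buggy: Discount = (total) => total - 10; // can go negative
const fixed: Discount = (total) => Math.max(0, total - 10);

const outcome = editTestLoop<Discount>(
  buggy,
  (fn) => {
    const failures = fn(5) < 0 ? ["discount produced a negative total"] : [];
    return { passed: failures.length === 0, failures };
  },
  () => fixed,
);
```

The point of the attempt budget is the same as the point of code review: the loop is allowed to fail and hand the problem back to a human instead of editing indefinitely.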

Act II – Frontend firefighting

Frontend issues often feel like whack-a-mole – a CSS quirk here, a state management bug there. One of our frontend devs was wrestling with a mystery UI bug: a React component wasn’t updating state correctly and nobody could figure out why the button stopped responding after a few clicks. It smelled like a race condition or a stale state closure, the kind of bug that can eat a day or two of debugging.

I suggested we let our tireless new assistant take a crack. The dev opened the React component in Cursor and asked in plain English: “Why does clicking ‘Add to Cart’ work only the first few times?” The AI combed through the code and promptly pointed out that the component was missing a key dependency in a useEffect hook, causing it not to refresh properly after the first renders. The dev literally face-palmed and said, “I would never have caught that so quickly.” With Cursor’s hint, she added the missing dependency and the bug vanished.
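For readers who haven't hit this class of bug, here is a framework-free sketch of the mechanism. It is not our component and it does not use React: `createEffect` is a hypothetical, simplified stand-in for how a dependency array decides whether an effect re-runs, and `makeComponent`, `count`, and `handler` are invented names.

```typescript
// Simplified stand-in for a hook with a dependency array (NOT React itself):
// the effect re-runs only when some dependency changed since the last render.
function createEffect() {
  let prevDeps: unknown[] | undefined;
  return (effect: () => void, deps: unknown[]): void => {
    const prev = prevDeps;
    const changed =
      prev === undefined ||
      deps.length !== prev.length ||
      deps.some((dep, i) => !Object.is(dep, prev[i]));
    if (changed) {
      effect();
      prevDeps = deps;
    }
  };
}

// Simulates one component instance re-rendered with a new `count` each time.
// The effect registers a click handler that closes over that render's `count`.
function makeComponent(trackCount: boolean) {
  const useEffectLike = createEffect();
  let handler: () => number = () => -1;

  return {
    render(count: number): void {
      useEffectLike(
        () => {
          handler = () => count + 1; // captures `count` from this render
        },
        trackCount ? [count] : [], // [] is the bug: the effect never re-runs
      );
    },
    click: (): number => handler(),
  };
}

const stale = makeComponent(false);
stale.render(0);
stale.render(3);
const staleResult = stale.click(); // 1: the handler still sees count = 0

const fresh = makeComponent(true);
fresh.render(0);
fresh.render(3);
const freshResult = fresh.click(); // 4: the handler was refreshed for count = 3
```

With an empty dependency list the handler from the first render is never replaced, so later clicks keep working from outdated state; listing `count` as a dependency refreshes it on every change.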

We then went a step further – we had Cursor suggest an improvement to the component. It recommended a small refactor to simplify state management, citing that it would reduce re-renders. The suggestion made sense, so we let Cursor apply the changes. It modified the code (all changes came through as a git-like diff for review, so nothing sneaky), and our dev verified everything still worked. It did, and the code was cleaner.

This experience drove home a point: Cursor is like an ever-vigilant code reviewer that watches for mistakes and optimizations. It doesn’t replace our engineers’ creativity, but it augments their work – especially useful for catching those “D’oh!” mistakes (like a missing effect dependency) early. The frontend fiasco turned into an opportunity for a teachable moment, courtesy of the AI. And it wasn’t lost on our team that we fixed the issue faster than the ongoing Slack back-and-forth on it.

By now, Cursor had flexed its muscles in backend and frontend scenarios. But could it help with our more experimental projects, like the little machine learning pipeline one team was prototyping? I was curious – and a bit hopeful – to see if Cursor could play the role of a data science intern too.

The playbook: Setting up Cursor for team success

Getting value from Cursor wasn’t just about throwing it at problems. It took some deliberate setup and team habit changes. Here are the key tips and tricks we learned (with a dash of EM wisdom) to onboard Cursor effectively:

- Create a “Docs for AI” folder: We set up a special folder in our repo with documentation just for the AI. Think of this as training your AI assistant on your project’s best practices. We included coding style guides, our database schema overview, and example API usage. Cursor uses these docs to understand how we prefer things done. For example, we added a guide like “How to write a unit test in our service” with examples. This paid off when the AI wrote tests that matched our style (naming conventions, using our custom utils, etc.). Essentially, the better you train your AI with project knowledge, the more it behaves like a seasoned team member.

- Enable the AI test loop (and embrace TDD): I encourage developers to use Cursor’s edit-test loop religiously. That is, whenever the AI writes new code, have it immediately write a corresponding test and run it. Cursor can be instructed to run your test suite or specific tests right after generating code. With a proper setup, it will iterate until tests pass. This catches bugs early – often moments after the code is written. It’s like having a QA engineer paired with every developer in real-time. One anecdote: a dev prompted Cursor to “implement the login feature and test it”; the AI wrote the code, then ran yarn test on the relevant suite and spotted a mistake in its first attempt, fixed it, and reran the test without bothering us (all thanks to an autonomous test loop). Talk about reducing friction in the dev process!

- YOLO mode – use with care: Yes, it’s actually called “YOLO mode” (You Only Live Once, aptly named). This is Cursor’s setting to let the AI run commands automatically without asking for permission. In normal mode, Cursor will draft code and ask you before applying changes or running anything destructive. In YOLO mode, it takes the wheel fully – writing code, executing it, running tests, installing packages, you name it, in a continuous cycle. This can massively accelerate development because it removes the human-in-the-loop for each step. One of our engineers tried YOLO mode to fix a broken script: he described the issue and told Cursor to run the script and keep fixing errors. It literally debugged and updated the script continuously until it worked, without further prompts. We were floored. That said, YOLO mode is powerful but a bit risky – the AI might do something unintended (hence “YOLO”). We restricted it to our dev environment and added a whitelist of allowed commands. As an EM, I see YOLO mode like a sharp tool: amazing for speed, but you must ensure guardrails (just like you wouldn’t let a junior dev deploy to prod on day one). Used wisely, it can accelerate the team’s workflow by removing needless “OK/confirm” clicks and letting the AI auto-fix trivial issues.

- Leverage project files & checklists: I discovered that Cursor shines when you give it a clear game plan. We started writing project files – basically a to-do list or mini-spec in Markdown that outlines a feature’s implementation steps. For example, for a “User Bookmarking” feature, we wrote out the steps: create DB migration, add backend endpoint, update UI, write tests for each, etc. Then we let Cursor loose on this project file. It tackled each step sequentially: generating code for step 1, running tests, moving to step 2, and so on. It was almost scarily efficient. I also keep a “project_check.md” file template that has the AI go through our plan and point out any potential issues or missing pieces before we start coding. Think of it as asking the AI “Is my plan complete and does it make sense?” – a second opinion that often catches things I forgot (like “What about error handling for X?”). This idea comes straight from pro users of Cursor. Writing out a clear spec and letting the AI validate or even generate parts of it can prevent missteps early. For EMs, it’s like getting an upfront code review on the design before anyone writes a line of code.

- Establish team-wide Cursor rules: I treated the AI assistant like a new team member who needed onboarding. Cursor allows creating shared rules and preferences (stored in a .cursor directory in the repo) that all devs running Cursor will use. We put in rules about which libraries to prefer, our coding standards, and instructions on how to run our app and tests. By checking these into Git, every developer’s Cursor instance sings from the same songbook. This drastically reduced inconsistencies in AI suggestions. It’s akin to having a linter or CI config – configure once, benefit everywhere. And it gave me, as EM, peace of mind that the AI wouldn’t, say, use a disallowed dependency or do something out-of-policy.

- Coach the team (and the AI) continuously: Just like a human hire, the AI gets better with feedback. We made it a habit to do quick retrospectives on how Cursor’s suggestions worked out. If it wrote a confusing bit of code, we’d update our docs or .cursor rules to clarify our expected approach next time. If it performed exceptionally well, we’d save that as an example for others. I even personified our AI in team meetings: “Hey, looks like our AI buddy didn’t know about our new microservice – let’s feed it the docs so it’s up to speed.” This kept the tone fun and reminded everyone that AI is a tool, not a magic solution – it works best when we collaborate with it actively.
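To make the project-file tip above concrete, here is a small sketch of how a Markdown checklist could be read as an ordered work queue. The file contents, the `- [ ]` checkbox convention, and the `parseProjectFile` and `nextStep` helpers are all assumptions for illustration; Cursor does not require this format, and your own project files may look different.

```typescript
interface Step {
  index: number; // 1-based position in the plan
  done: boolean;
  text: string;
}

// Parses lines like "- [ ] do something" or "- [x] done thing".
// Any other line (titles, notes, blank lines) is ignored.
function parseProjectFile(markdown: string): Step[] {
  const pattern = /^\s*[-*]\s+\[( |x|X)\]\s+(.+?)\s*$/;
  const steps: Step[] = [];
  for (const line of markdown.split(/\r?\n/)) {
    const match = pattern.exec(line);
    if (match) {
      steps.push({
        index: steps.length + 1,
        done: match[1] !== " ",
        text: match[2],
      });
    }
  }
  return steps;
}

// The first unchecked step is the one to hand to the assistant next.
function nextStep(steps: Step[]): Step | undefined {
  return steps.find((step) => !step.done);
}

// Hypothetical project file for the "User Bookmarking" example.
const projectFile = `
User Bookmarking

- [x] Create DB migration
- [ ] Add backend endpoint
- [ ] Update UI
- [ ] Write tests for each step
`;

const steps = parseProjectFile(projectFile);
const upNext = nextStep(steps); // the "Add backend endpoint" step
```

Keeping the plan in a checkable format like this makes it easy for both humans and the AI to see what is finished and what comes next.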

By following this playbook, I saw Cursor transition from a cool toy for individual devs into a productivity multiplier for the whole team. I spent less time on tedious boilerplate or debugging, and more on creative problem-solving. It’s not just me saying it; we’ve seen teams claim they can build software 5–30× faster with AI pair programmers like Cursor. My experience was closer to the 5× end of that range on average, but even that is transformative. And crucially, this speed didn’t come at the cost of burnout – if anything, it reduced burnout by catching bugs early and offloading grunt work.

However, using an AI assistant does introduce a new kind of management challenge: you’re now effectively “managing” a non-human team member’s output. This leads to an interesting comparison…

Managing an AI vs. a dev

As an engineering manager, a big part of the job is nurturing junior developers – reviewing their code, giving feedback, and gradually trusting them with bigger tasks. Adopting Cursor felt very similar, except your junior dev in this case is an AI that writes code in seconds and never sleeps. Let’s compare, tongue-in-cheek:

- Speed and stamina: A human might take a day to write that new feature and likely needs coffee and sleep. Cursor (the “AI junior”) cranks out a first draft in minutes and can code at 3 AM without batting an eye. Advantage: AI, for raw throughput and no sleep needed.

- Understanding context: A new human hire needs weeks to learn the codebase. Cursor? It can be fed the entire repo and relevant docs upfront. Thanks to its massive context window (Claude can read ~75,000 words of context), it can literally have the whole project in its head. That’s like a junior dev reading the entire wiki and code history overnight. Advantage: AI, in ramp-up speed.

- Creativity and intuition: Here, humans shine. A dev might come up with a clever hack or ask a question that redefines a problem. The AI tends to be very predictable – it does exactly what you ask, sometimes to a fault. It won’t intuit the product vision or challenge requirements; it’s more likely to blindly build what it’s told (even if the idea is flawed). Humans win on judgment and thinking outside the box.

- Mistakes and learning: Both devs and AI will make mistakes, but of different kinds. A dev might not know a certain algorithm and implement something inefficiently. The AI might know the algorithm but misapply it if the prompt was ambiguous, or it might produce code that looks correct but subtly isn’t (we’ve seen it occasionally introduce a bug by misunderstanding a spec). In both cases, code review is essential. As the EM or team lead, you must review AI-generated code just like you would a junior’s code – arguably even more thoroughly, since the AI has no real understanding of the consequences. As the Stack Overflow blog succinctly put it, “Generative AI is like a junior engineer in that you can’t roll their code off into production. You are responsible for it.” In other words, the accountability lies with us. I always remind the team: if AI wrote it, we own it.

- Attitude and feedback: The fun part – the AI has zero ego. It won’t get defensive if you reject its code or tell it to try again. (It might sometimes stubbornly repeat a solution until you rephrase the prompt, but that’s another story.) With devs, of course, we have to be mindful of their growth and confidence. In a way, managing Cursor’s output is easier on the emotions – you can be blunt: “This code is not optimal, redo it,” and you won’t hurt any feelings. On the flip side, the AI won’t learn from intuition or passion; it learns only from what we explicitly feed it (docs, rules, examples). So invest that effort, just as you would in mentoring a human.

The bottom line for an EM: treat AI like a very fast-learning junior developer. Set clear guidelines, review its work, and gradually trust it with more complex tasks as it proves itself. Unlike a human, you can scale an AI assistant across every developer’s machine, so it’s like hiring a whole team of tireless juniors overnight – but only if you manage the process well.

By approaching Cursor as a team member rather than a mere tool, we built a healthy dynamic where developers use it to amplify their productivity, not replace their thinking. It’s a collaboration: the devs provide direction and oversight, Cursor provides speed and breadth of knowledge. In our case, this synergy led to some remarkable outcomes and a happier team.

Conclusion: 5× faster, without the burnout

My journey with Cursor transformed how my engineering team works. What began as an experiment to reduce Slack chaos turned into a new way of building software. We deliver features faster, we catch issues before they hit production, and our engineers spend more time on creative problems instead of boilerplate or bug-hunting. As an EM, I’m no longer stuck in endless status meetings about blockers – many blockers get unblocked by an AI pair programmer working quietly alongside my team.

To wrap up, here are some actionable takeaways if you’re an engineering manager considering Cursor AI for your engineering team:

- Start small, but start – Pick a pilot project (backend, frontend, or anything) and let one or two developers experiment with Cursor. Have them share early wins with the rest of the team. Seeing a bug fix or new feature built in half the time is the best way to get buy-in.

- Onboard the AI – Just like a new hire, feed Cursor your tribal knowledge. Set up a docs folder and .cursor rules with your coding standards, project setup steps, and examples. This upfront investment will pay off when the AI’s output aligns with your expectations.

- Integrate into workflow – Encourage a test-driven approach so the AI is always validating its code. Update your CI to accommodate AI-written tests or scripts. Essentially, make Cursor part of the team’s definition of “done” (e.g., code isn’t done until Cursor’s suggested tests pass).

- Use YOLO mode for iteration – For internal tools or non-critical work, try YOLO mode to supercharge iteration speed. Just put safety limits in place (like read-only access to prod data, or a sandbox repo) and always review the final diff. It’s amazing for rapid prototyping or heavy refactoring where the AI can grind through mundane fixes quickly.

- Educate and set expectations – Train your team on best practices with AI dev tools. Emphasize that AI output is not gospel – it’s a draft to refine. Encourage developers to view Cursor as a teammate who writes first drafts, not as an infallible code genie. This mindset keeps quality high.

- Measure and celebrate – Track metrics before and after adopting Cursor: e.g., how many bugs are caught in pre-prod, time from spec to release, team overtime hours. We saw improvements in all of the above, which helped affirm the decision. Celebrate the cool stuff – like that time the AI saved a Friday night deployment – to keep morale up.
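One of the safety limits suggested for YOLO mode, an allowlist of permitted commands, can be sketched as a small check. This is a hedged illustration of the logic only, not Cursor's configuration format or API: `ALLOWED_COMMANDS`, `BLOCKED_TOKENS`, and `isCommandAllowed` are hypothetical names, and the entries are placeholders to adapt to your own environment.

```typescript
// Hypothetical allowlist: entries are matched on "program subcommand".
const ALLOWED_COMMANDS: ReadonlySet<string> = new Set([
  "yarn test",
  "yarn lint",
  "git status",
  "git diff",
]);

// Shell operators that could chain an unapproved command onto an approved one.
const BLOCKED_TOKENS = ["&&", "||", ";", "|", ">", "<", "`", "$("];

function isCommandAllowed(commandLine: string): boolean {
  const trimmed = commandLine.trim();
  if (trimmed === "") return false;

  // Reject anything containing chaining or redirection operators outright.
  if (BLOCKED_TOKENS.some((token) => trimmed.includes(token))) return false;

  // Compare on the first two words, so "yarn test src/login" matches "yarn test".
  const [program, subcommand = ""] = trimmed.split(/\s+/);
  return ALLOWED_COMMANDS.has(`${program} ${subcommand}`.trim());
}

const runsTests = isCommandAllowed("yarn test src/login"); // true
const forcePush = isCommandAllowed("git push --force"); // false
const chained = isCommandAllowed("yarn test && rm -rf /"); // false
```

The design choice here is deny-by-default: anything not explicitly listed is refused, which is the same posture you would take with a new hire's production access.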

Finally, consider this: What if your entire team could move 5× faster without anyone burning out?

That’s not a fanciful dream – we’re seeing hints of it with AI dev tools like Cursor in action. By embracing Cursor as an AI partner, you empower your engineers to focus on what really matters (creative problem solving, big-picture thinking) while the AI handles the repetitive and tedious bits. As an engineering manager, it’s a huge win to see the team shipping confidently and even having fun with this “junior dev who never sleeps.”

So, are you ready to onboard your own tireless AI developer? With the right setup and mindset, Cursor just might become the MVP of your engineering team’s productivity toolbox. Here’s to shipping better code, faster – and maybe turning those panicked “just ship it” Slack threads into a thing of the past!